Five Takes to End 2025

2025 was a year where a lot of assumptions across the tech industry were seriously put to the test. Instead of simply making predictions or tallying wins and losses, I wanted to write out a handful of takes on what actually happened and what it says about where things are headed next.

My First 24 Hours with Meta's Incredible New Glasses & Muscle-Reading Wristband

Let’s just start out with this: Meta is onto something. After the first 24 hours with my very own pair of Meta Ray-Ban Displays, I must say that Apple needs to get it together. I was already a big fan of the Meta Ray-Ban glasses, frequently wearing them to listen to podcasts or take photos on the …

Scenes From Anthropic’s Activation at Graydon Carter’s Chic West Village Shop

This Friday afternoon I swung by the West Village for a free hat. Not just any hat though, a Claude hat. Anthropic is hosting a special activation this weekend here in Manhattan at Air Mail on Hudson Street which shares its name with the online newsletter. It’s a very stereotypical unassuming West …

Keynotes are Bad Because Products Can’t Speak for Themselves Anymore

That Google event yesterday was very strange, from Jimmy Fallon’s general awkwardness to the uncomfortable QVC-style segment to the overuse of high-profile celebrities. That’s already been discussed at length, so I won’t dwell on it. But it did get me thinking a lot about the state of product …

GPT-5 Pushes Vibe Coding Beyond My Wildest Dreams

I’ve spent the past 6 months learning how to vibe code both native and web applications after spending copious amounts of time figuring out the very best way for me to prompt each large language model. It’s been a busy year for me personally and I’ve spun up tons of projects that …

Ollama's New App Makes Running Local Models Even Easier

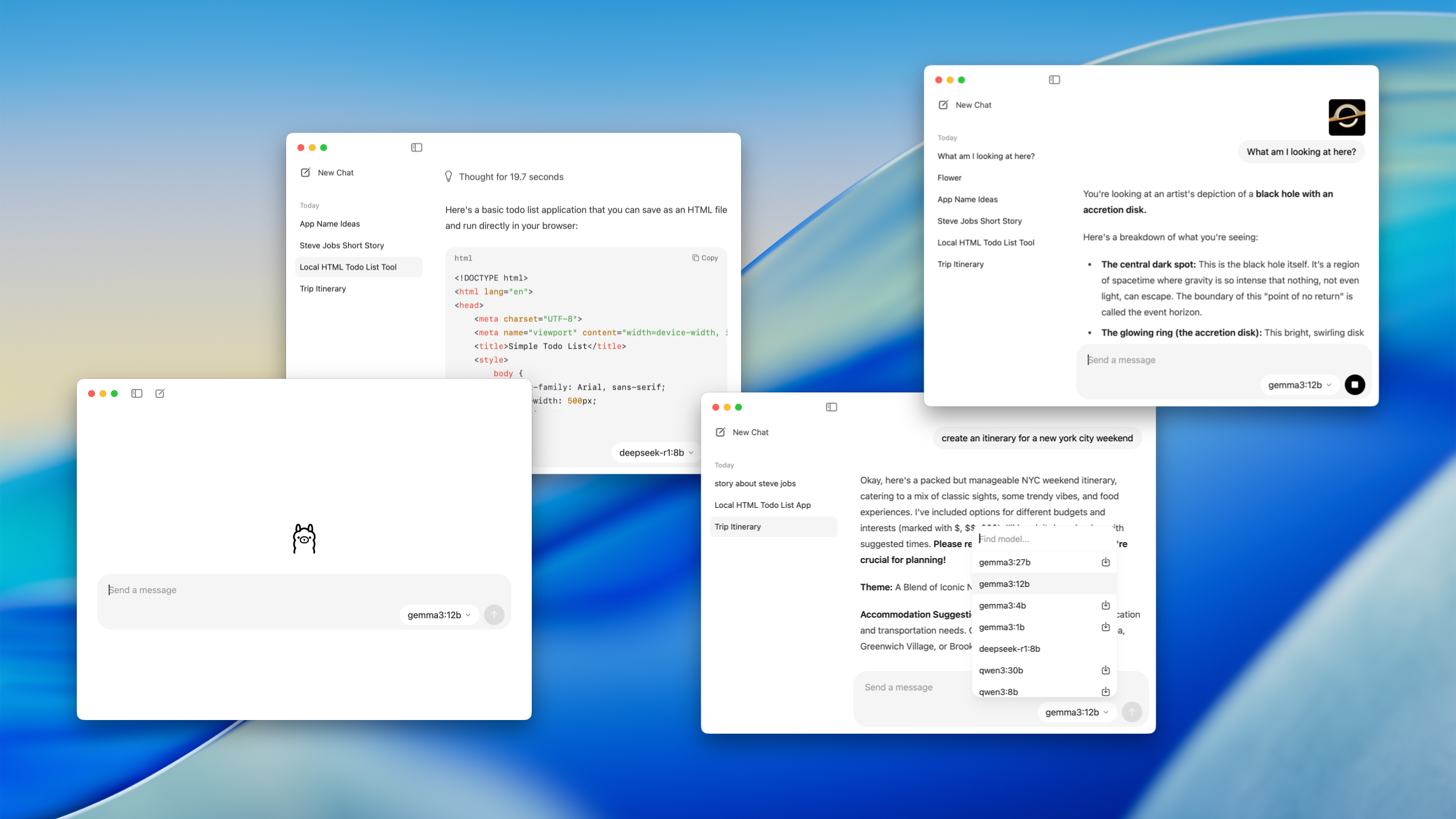

As a lifelong Mac user, I have a particular affinity for native applications. For a long time, most of the AI models were only usable through a web browser. But the model providers have slowly been rolling out fully native apps for their products. We now have native apps for ChatGPT, Claude, …

Good Wearable AI Gadgets Are Already Here, And You Might Already Own One

We’ve all been waiting for the first good AI wearables to come along. There have been tons of attempts, from wrist-worn recording devices to pins with lasers to glasses with cameras. Companies are throwing everything at the wall to see what might stick. Some of these gadgets have been …

Meta Goes on an AI Hiring Spree, What's Apple's Plan?

Apple should be the one on a hiring spree. Over the past few weeks, Mark Zuckerberg and Meta have kicked the hiring and acquisitions machine into high gear. The man knows he can’t miss out on the next platform shift and it shows. It wasn’t the metaverse and it wasn’t goggles, but …

The Curious Case of Apple and Perplexity: Do They Need Each Other?

Apparently it’s the season of acquisitions and hirings. Thankfully, this time Apple is also in on the action. According to Mark Gurman, Apple executives are in the early stages of mulling an acquisition of Perplexity. My initial reaction was “that wouldn’t work.” But I’ve taken some time to think …

On Dia, Arc, and "People Don't Know What They Want Until You Show it to Them"

I was absolutely captivated by the Arc web browser from the moment I started using it a few years ago and quickly became an evangelist. It really felt like an entirely new idea for how to use the internet: more organized while also somehow being more fun. It became an essential part of my …

“The Talk Show Live” Returns to Form—And It Was Great

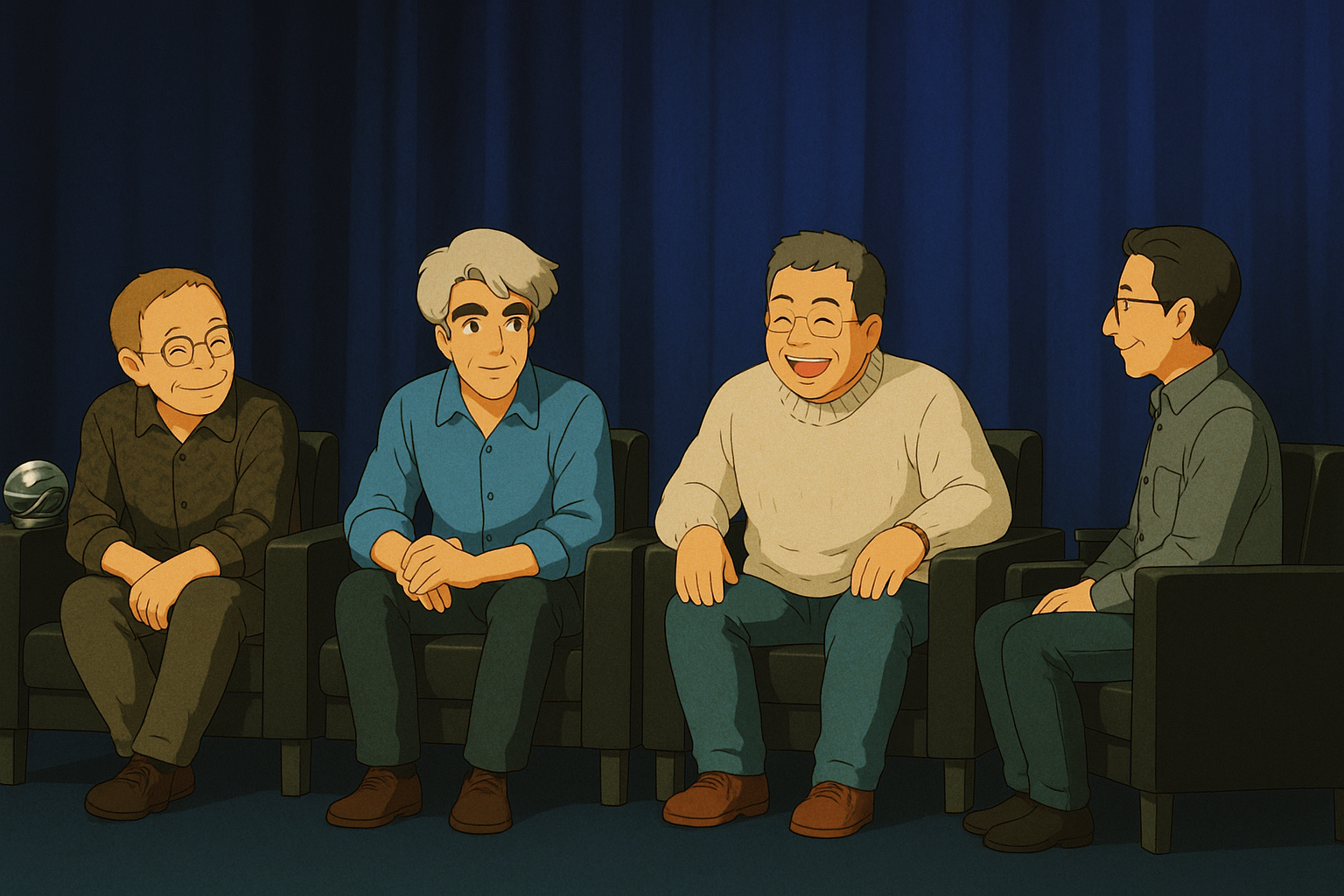

While there was a lot of consternation heading into WWDC over Apple skipping John Gruber’s live taping of The Talk Show, it might have all been for the best. Instead of sitting down with Craig and Joz, who were both unlikely to say much more than they did during the day, we got to hear a really fun …

WWDC 2025: Through Liquid Glass—No Longer Behind, Just Off to the Side

I was unusually concerned heading into this year’s WWDC, fully prepared to be disappointed. I feared that Apple would continue to be on weak footing after the Apple Intelligence failures of the past year. To my surprise, I feel very differently post-keynote. I worried that they wouldn’t acknowledge …

Apple’s Silence at "The Talk Show" Will Speak Volumes

If you follow Apple at all, you’ve likely heard the news: Apple’s skipping John Gruber’s The Talk Show Live at WWDC this year. For a decade, it’s been tradition for Apple executives to join John on stage at the annual conference to recap the keynote and dive deeper into the announcements. It is …

A Tale of Two IOs: What it Looks Like When Apple Doesn't Lead

We are at more than just an inflection point; we’re at a moment where the global technological order may be about to fundamentally change. Don’t think about this just as an “iPhone moment” but as an “Apple acquires NeXT” event. A NeXTus event if you will. When Apple acquired NeXT, it kicked …

Raycast for iPhone is the Launch Center Successor I've Always Wanted

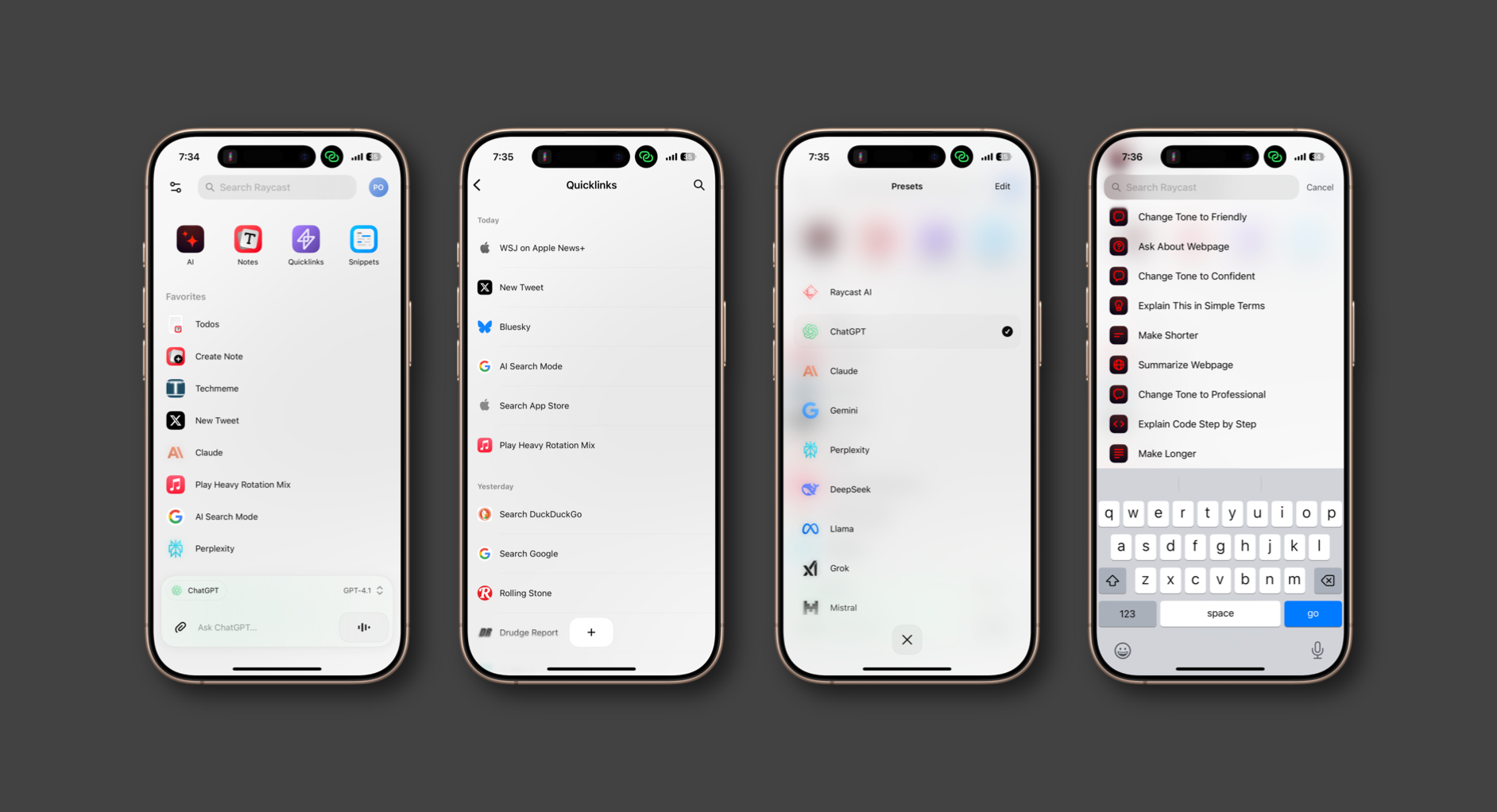

Before it was sort of abandoned, I was a heavy user of Launch Center Pro. Apple enthusiasts and power users alike will almost certainly remember the powerful shortcut tool from Contrast that let you build out an extensive grid of quick ways to get things done. The app is technically still available …

Jony Ive, Laurene Powell Jobs, and Sam Altman Walk into a Bar—Will They Walk Out with the Gadget of the Future?

The worst-kept secret in Silicon Valley right now is that Jony Ive is helping Sam Altman build new gadgets. On the surface that may not seem like much of a big deal. After all, Jony left Apple six years ago. But his career is inextricably linked to and was fundamentally shaped by Steve Jobs and …

The Week ChatGPT Truly Became an Assistant

Yesterday, OpenAI introduced a new feature under the radar that I think might have just changed everything. ChatGPT has had a rudimentary “memory” system for quite some time. It lets the model look for important context in your messages, like your job or a particular spot you enjoy. It then saves …

Craig Saves the Day, Gives Engineers the Green Light to Use Off-the-Shelf LLMs

According to The Information, Apple is now letting engineers build products using third-party LLMs. This is a huge change that could seriously alter the course of Apple Intelligence. I have been a proponent of Apple shifting its focus from building somewhat mediocre closed first-party models to …

Finally, ChatGPT Can Accurately Generate Images with Text

OpenAI dropped an all-new image generation system for ChatGPT today and man is it good. One of the biggest problems with artificially generated images has been the inability to generate accurate text within them. There have historically been problems with inaccurate characters, spelling, or even …

Gemini, Siri, and Default Assistants on iOS

I do not want to harp on the Siri situation, but I do have one suggestion that I think Apple should listen to. Because I suspect it is going to take quite some time for the company to get the new Siri out the door properly, they should do what was previously unthinkable. That is, open up iOS to …

Very Good News: Mike Rockwell, No Fan of Siri, is Here to Save It

Mark Gurman has just reported that Apple is reshuffling the Siri team’s leadership in light of the recent Apple Intelligence controversy. They are putting Mike Rockwell, the man behind Vision Pro, in charge of the effort and taking AI chief John Giannandrea off the project. While you can lament …

Can Apple Even Pull This Off?

I guess I should not be surprised, but I am still furious. Apple shared with John Gruber earlier today that they are delaying the launch of the flagship new personalized Siri features for Apple Intelligence that were unveiled last summer at WWDC. We have been waiting what already feels like forever …

A Comet Approaches Planet Browser Company

If you have followed me for a bit, you know that I really love the work that The Browser Company has done with Arc. They built a browser that is ridiculously powerful, infinitely customizable, and even extended their ambitions to mobile with one of the nicest AI search tools. But something happened …

A Pretty Siri Can't Hide the Ugly Truth

We’ve been waiting for Siri to get good for quite a long time. Before the emergence of ChatGPT, Siri sort of languished. Outside of the addition of Shortcuts in 2018, it has generally remained at the same level of intelligence since 2015 when they added proactive functionality. Last summer, …

Gemini Integration Could be a Huge Upgrade Over ChatGPT in Siri

A few weeks ago Federico Viticci wrote a great piece on MacStories about how one of Gemini’s greatest strengths in the LLM competition is that it’s the only one with Google app extensions. On Friday, Apple released the first beta of iOS 18.4 and while many of the AI updates that we were …
